We will know less and less what it means to be human – José Saramago

The idea of thinking machines is as old as thinking itself. The quest to create artificial life and machines with human-like abilities has deep roots in human culture and history. From mythological figures of ancient Greece such as Galatea, Talos, and Pandora to Ada Lovelace’s description of Babbage’s engine, from Hobbes’s description of thinking in logical terms to Leibniz’s universal language, and from Mary Shelley’s Frankenstein to Turing’s machine and his famous test, we see the desire to seek our intelligence in entities outside ourselves.1 However, it was not until 1955, when four scientists wrote the proposal for the “Dartmouth College Summer Research Project,” that these ideas began to feel tangible.

The project proposed that two months were enough to discover the recipe for intelligence.2 This pivotal moment occurred at Dartmouth College’s mathematics department, where a select group of ten scientists gathered with the mission to discover “how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves”.3 It is often said that this gathering marked the birth of a new field that is now changing the world: the field of “Artificial Intelligence.”4

Since its inception, AI has been a highly metaphorical field. As a technoscientific discipline that sits between the brain sciences and engineering, AI has freely borrowed terms from neighbouring disciplines, like psychology, to conceptualize its newly developed techniques and applications.5 Terms like “learning,” “understanding,” “thinking,” “reasoning,” and even the name of the field itself – “Artificial Intelligence” – can be considered broad metaphors that embody the analogy between humans (or, in a broader sense, living beings) and machines.6 However, metaphors, as fundamental linguistic resources for understanding complex concepts, can also be damaging. By drawing attention to specific elements of the studied phenomena, metaphors can obscure others. They can also be “double-edged swords” when we forget their metaphorical nature.7 This forgetfulness, which leads to a literal ascription of the metaphor, can constrain our understanding of the studied phenomena and encourage the rejection of alternative frameworks.8

In the seventy years since AI’s inception, the progression of advanced technologies like ChatGPT has transformed what were once metaphors into literal ascriptions of human cognitive abilities to machines. Today, when media and researchers discuss these models’ capabilities and talk about “learning” about the world or “understanding” language, the implications are often literal, not metaphorical or analogical.

For instance, a recent OpenAI blog post introducing the new version of GPT suggested that “ChatGPT can now see, hear, and speak.” What this means in practice, however, is that the model has been enhanced to process visual data, transcribe and interpret speech, and generate audio outputs instead of just text.9 Similarly, in research, there are claims that AI systems show “sparks” of “general intelligence”, are “hallucinating”, or are even making progress towards emotional experiences like pain and joy.10 These assertions suggest a literal interpretation of psychological terms that were once purely metaphorical, indicating a significant shift in technology and our understanding of it.

This way of referring to AI systems is misleading at best and dangerous at worst. AI systems do not “see,” “hear,” or “speak” and are not on their way to developing consciousness. Believing otherwise sets unrealistic expectations and creates confusion, hindering our ability to deal with the ethical and practical challenges posed by technology.11 For instance, depicting AI systems as surpassing humans in intelligence and rationality may lead users to over-trust them and employ them in high-stakes scenarios where they can exacerbate social inequalities and where their mistakes can cause significant harm. Equally concerning is the bi-directionality of these metaphors. Understanding technology in human and life-like terms paves the way for conceptualizing humans in technological terms. In other words, describing machines with human-like qualities may lead to characterizing humans in machine-like terms. This trend is problematic: it risks reducing humans to mere calculating machines, flattening the complexity and richness of human experience, diminishing our grasp of human nature, and neglecting essential elements of what it is to be human.

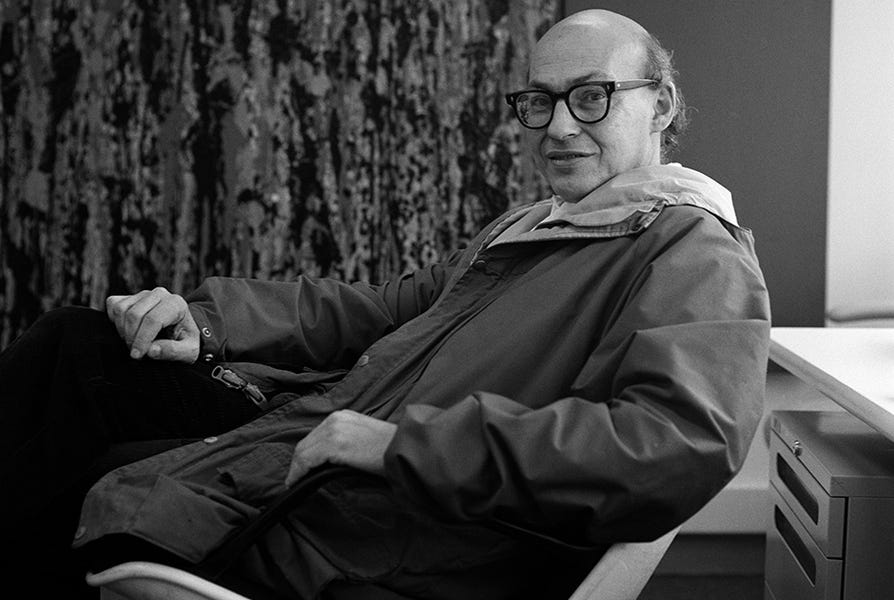

Marvin Minsky (1927 – 2016), one of the founders of AI and co-author of the Dartmouth College project proposal, was a very influential figure in the field. Interestingly, one can find in his early work an example of the transition from metaphorical language to a literal description of human capacities in machines.

From an early age, Minsky showed a fascination with the problem of intelligence, and, as a physics undergraduate, he delved into the realms of early cybernetics and mathematical biophysics. His transformative encounter with Warren McCulloch and Walter Pitts’s paper on the artificial neuron, the first building block of contemporary neural networks, fuelled his vision of the brain as analogous to electronic technologies like radios and televisions.12

To test whether machines could “learn,” Minsky, in 1951, developed the world’s first neural network simulator: the Stochastic Neural Analog Reinforcement Calculator (SNARC).13 The results were thrilling: the SNARC demonstrated how a sophisticated electronic network could manage redundancy in a manner analogous to the human brain. The device continued to work towards its goal via reinforcement even with fuses blown and wires pulled, an achievement that provided an empirical basis for a theory that Von Neumann had postulated but never operationalized.14 On a more philosophical note, the SNARC’s results legitimized a key aspect of the metaphor between natural and artificial information processing systems. Heartened, Minsky wrote, “There is no evident limit to the degree of complexity of behavior that may be acquired by such a system.”15

Despite his excitement about these results, Minsky was careful with his language when describing his achievements: the ‘behavior’ of his ‘brain model’ and its ‘plausible’ ability to ‘consider’ or ‘learn’ actions were placed in quotation marks, emphasizing the metaphorical nature of these terms when applied to his models.16 Other researchers like Claude Shannon and Von Neumann shared this caution. While they accepted the analogies and metaphors linking psychological concepts to computers, they considered the assertion of complete equivalence between these terms and computer functionalities overly ambitious and bold.17 For instance, in his last work, Von Neumann focused on highlighting the parallels between neurons in the human brain and the vacuum tubes used in computers at the time. However, he also emphasized the limitations of this comparison.18

The shift came in 1955 and 1956, as Minsky’s research matured. The once-metaphorical term “learning” shed its quotation marks, shifting towards a literal description of machine capabilities.19 “Learning” became learning, and with this shift, he validated a different way of talking about these models. Some argue, like Penn in his PhD dissertation, that this shift had economic reasons. He points to the fact that, for example, only a decade later, the MIT Artificial Intelligence Laboratory – scare quotes free – was fully operational with a budget of millions.20 On this point, I declare myself agnostic. Whether or not this was the main reason, I believe that, more importantly, this is a case in which metaphorical language transitioned to the literal ascription of these properties to machines. Even if the economic explanation does not hold, this undoubtedly marked a shift in the perception and expectations surrounding AI. The evolution from “learning” to learning, from a metaphor to a literal description, indicates a broader acceptance of the potential of AI. It might reflect the progress from conceptualizing AI as an abstract, future possibility to recognizing it as a tangible technology with real-world capabilities. This change in language around AI is not just a matter of semantics; it signifies a deeper evolution in the field, influencing how we understand both technology and ourselves.

This trend of describing machines in human-like ways continued to evolve, as shown above, mirroring broader societal and technological changes. As we navigate the current landscape where LLMs like GPT-4 are often referred to with anthropomorphic language, it’s crucial to understand the roots of this perspective. The wiping away of the quotes marked a pivotal shift. When “thinking” became thinking, “understanding” became understanding, and “intelligence” became intelligence, the line between humans and machines, like the quotes, began to be erased.

As we confront the challenges and implications of this blurred line, revisiting the use of metaphorical language in AI becomes relevant. We should try to find Minsky’s missing quotes and put them back around terms like thinking, understanding, and intelligence. The difference between humans and machines may lie in those quotes.

- Goodfellow, I., Bengio, Y., & Courville, A., Deep learning (MIT Press, 2016), available at: http://www.deeplearningbook.org. Nilsson, N. J., The quest for artificial intelligence (Cambridge University Press, 2009). ↩︎

- Summerfield, C., Natural general intelligence: How understanding the brain can help us build AI (Oxford University Press, 2023). ↩︎

- McCarthy, J., Minsky, M. L., Rochester, N., Shannon, C. E., “A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence, August 31, 1955,” AI Magazine, 27, no. 4 (2006): 12, https://doi.org/10.1609/aimag.v27i4.1904. ↩︎

- Summerfield, Natural general intelligence. ↩︎

- Floridi, L., Nobre, A.C., “Anthropomorphising machines and computerising minds: the crosswiring of languages between Artificial Intelligence and Brain & Cognitive Sciences,” Centre for Digital Ethics (2024), https://dx.doi.org/10.2139/ssrn.4738331. ↩︎

- Möck, L.A., “Prediction Promises: Towards a Metaphorology of Artificial Intelligence,” Journal of Aesthetics and Phenomenology, 9, no.2 (2022): 119-139, https://doi.org/10.1080/20539320.2022.2143654. ↩︎

- Birhane, A., “The Impossibility of Automating Ambiguity,” Artificial Life, 27, no. 1 (2021): 44–61, https://doi.org/10.1162/artl_a_00336. Möck, “Prediction Promises”. ↩︎

- Watson, D., “The Rhetoric and Reality of Anthropomorphism in Artificial Intelligence,” Minds & Machines, 29 (2019): 417–440, https://doi.org/10.1007/s11023-019-09506-6. ↩︎

- Barrow, N., “Anthropomorphism and AI Hype,” AI and Ethics (forthcoming). ↩︎

- Bubeck, S. et al., “Sparks of Artificial General Intelligence: Early experiments with GPT-4,” arXiv (2023), https://doi.org/10.48550/arXiv.2303.12712. OpenAI, “GPT-4 Technical Report,” arXiv (2023), https://doi.org/10.48550/arXiv.2303.08774. Butlin, P. et al., “Consciousness in artificial intelligence: Insights from the science of consciousness,” arXiv (2023), https://doi.org/10.48550/arXiv.2308.08708. ↩︎

- Watson, “The Rhetoric and Reality of Anthropomorphism”. ↩︎

- Minsky, M. L., Solomon, C. (ed.), Xiao, X. (ed.), Inventive Minds: Marvin Minsky on Education (Penguin Random House 2019). ↩︎

- Penn, J., “Inventing Intelligence: On the History of Complex Information Processing and Artificial Intelligence in the United States in the Mid-Twentieth Century” (PhD diss., University of Cambridge, 2020), https://doi.org/10.17863/CAM.63087. ↩︎

- Minsky, M., “Theory of neural-analog reinforcement systems and its application to the brain-model problem” (PhD diss., Princeton University, 1954). ↩︎

- Penn, “Inventing Intelligence,” 167. ↩︎

- Minsky, “Theory of neural-analog reinforcement systems”. ↩︎

- Penn, “Inventing Intelligence”. ↩︎

- Gigerenzer, G., Goldstein, D. G., “Mind as Computer: Birth of a Metaphor,” Creativity Research Journal, 9, no. 2-3 (1996): 131-144, https://doi.org/10.1080/10400419.1996.9651168. ↩︎

- Penn, “Inventing Intelligence”. ↩︎

- Ibid. ↩︎